- CLI Usage Examples (non-Docker)

CLI Usage Examples (non-Docker)

+ CLI Usage Examples: non-Docker

CLI Usage Examples: non-Docker

# make sure you have pip-installed ArchiveBox and it's available in your $PATH first

@@ -515,7 +514,7 @@ archivebox add --depth=1 'https://news.ycombinator.com'

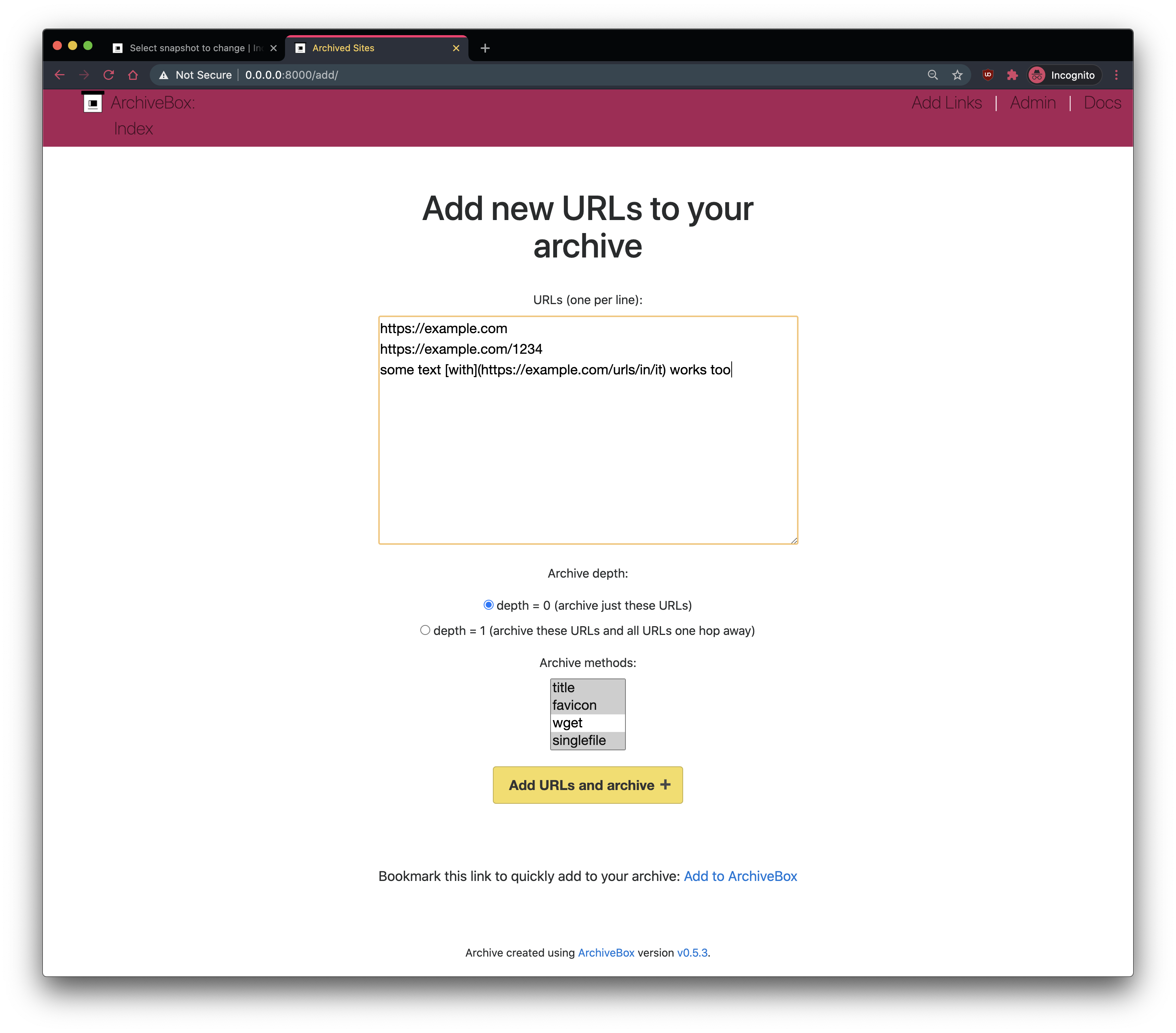

- Docker Compose CLI Usage Examples

Docker Compose CLI Usage Examples

+ CLI Usage Examples: Docker Compose

CLI Usage Examples: Docker Compose

# make sure you have `docker-compose.yml` from the Quickstart instructions first

@@ -533,7 +532,7 @@ docker compose run archivebox add --depth=1 'https://news.ycombinator.com'

- Docker CLI Usage Examples

Docker CLI Usage Examples

+ CLI Usage Examples: Docker

CLI Usage Examples: Docker

# make sure you create and cd into in a new empty directory first

@@ -655,13 +654,13 @@ docker run -it -v $PWD:/data archivebox/archivebox add --depth=1 'https://exampl

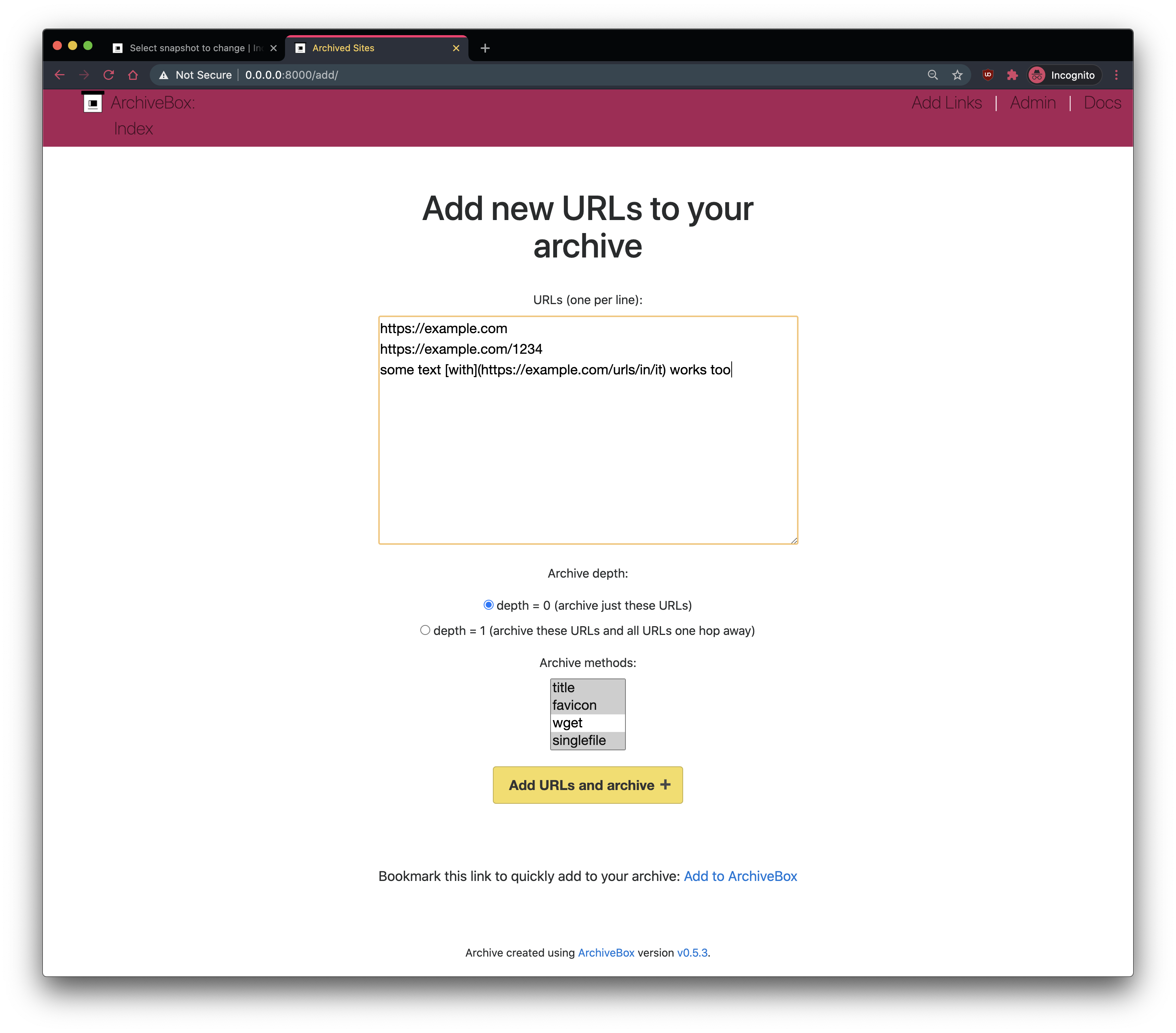

ArchiveBox supports injesting URLs in [any text-based format](https://github.com/ArchiveBox/ArchiveBox/wiki/Usage#Import-a-list-of-URLs-from-a-text-file).

-  From manually exported [browser history](https://github.com/ArchiveBox/ArchiveBox/wiki/Quickstart#2-get-your-list-of-urls-to-archive) or [browser bookmarks](https://github.com/ArchiveBox/ArchiveBox/wiki/Quickstart#2-get-your-list-of-urls-to-archive) (in Netscape format)

- See instructions for: Chrome, Firefox, Safari, IE, Opera, and more...

+ Instructions: Chrome, Firefox, Safari, IE, Opera, and more...

-

From manually exported [browser history](https://github.com/ArchiveBox/ArchiveBox/wiki/Quickstart#2-get-your-list-of-urls-to-archive) or [browser bookmarks](https://github.com/ArchiveBox/ArchiveBox/wiki/Quickstart#2-get-your-list-of-urls-to-archive) (in Netscape format)

- See instructions for: Chrome, Firefox, Safari, IE, Opera, and more...

+ Instructions: Chrome, Firefox, Safari, IE, Opera, and more...

-  From URLs visited through a [MITM Proxy](https://mitmproxy.org/) with [`archivebox-proxy`](https://github.com/ArchiveBox/archivebox-proxy)

Provides [realtime archiving](https://github.com/ArchiveBox/ArchiveBox/issues/577) of all traffic from any device going through the proxy.

-

From URLs visited through a [MITM Proxy](https://mitmproxy.org/) with [`archivebox-proxy`](https://github.com/ArchiveBox/archivebox-proxy)

Provides [realtime archiving](https://github.com/ArchiveBox/ArchiveBox/issues/577) of all traffic from any device going through the proxy.

-  From bookmarking services or social media (e.g. Twitter bookmarks, Reddit saved posts, etc.)

- See instructions for: Pocket, Pinboard, Instapaper, Shaarli, Delicious, Reddit Saved, Wallabag, Unmark.it, OneTab, Firefox Sync, and more...

+ Instructions: Pocket, Pinboard, Instapaper, Shaarli, Delicious, Reddit Saved, Wallabag, Unmark.it, OneTab, Firefox Sync, and more...

From bookmarking services or social media (e.g. Twitter bookmarks, Reddit saved posts, etc.)

- See instructions for: Pocket, Pinboard, Instapaper, Shaarli, Delicious, Reddit Saved, Wallabag, Unmark.it, OneTab, Firefox Sync, and more...

+ Instructions: Pocket, Pinboard, Instapaper, Shaarli, Delicious, Reddit Saved, Wallabag, Unmark.it, OneTab, Firefox Sync, and more...

@@ -1018,7 +1017,7 @@ For various reasons, many large sites (Reddit, Twitter, Cloudflare, etc.) active

@@ -1061,7 +1060,6 @@ Improved support for saving multiple snapshots of a single URL without this hash

@@ -1018,7 +1017,7 @@ For various reasons, many large sites (Reddit, Twitter, Cloudflare, etc.) active

@@ -1061,7 +1060,6 @@ Improved support for saving multiple snapshots of a single URL without this hash

-

### Storage Requirements

Because ArchiveBox is designed to ingest a large volume of URLs with multiple copies of each URL stored by different 3rd-party tools, it can be quite disk-space intensive. There are also some special requirements when using filesystems like NFS/SMB/FUSE.

@@ -1071,17 +1069,16 @@ Because ArchiveBox is designed to ingest a large volume of URLs with multiple co

Click to learn more about ArchiveBox's filesystem and hosting requirements...

-

-**ArchiveBox can use anywhere from ~1gb per 1000 articles, to ~50gb per 1000 articles**, mostly dependent on whether you're saving audio & video using `SAVE_MEDIA=True` and whether you lower `MEDIA_MAX_SIZE=750mb`.

-

-Disk usage can be reduced by using a compressed/deduplicated filesystem like ZFS/BTRFS, or by turning off extractors methods you don't need. You can also deduplicate content with a tool like [fdupes](https://github.com/adrianlopezroche/fdupes) or [rdfind](https://github.com/pauldreik/rdfind).

-

-**Don't store large collections on older filesystems like EXT3/FAT** as they may not be able to handle more than 50k directory entries in the `data/archive/` folder.

-

-**Try to keep the `data/index.sqlite3` file on local drive (not a network mount)** or SSD for maximum performance, however the `data/archive/` folder can be on a network mount or slower HDD.

-

-If using Docker or NFS/SMB/FUSE for the `data/archive/` folder, you may need to set [`PUID` & `PGID`](https://github.com/ArchiveBox/ArchiveBox/wiki/Configuration#puid--pgid) and [disable `root_squash`](https://github.com/ArchiveBox/ArchiveBox/issues/1304) on your fileshare server.

-

+

+- ArchiveBox can use anywhere from ~1gb per 1000 Snapshots, to ~50gb per 1000 Snapshots, mostly dependent on whether you're saving audio & video using

SAVE_MEDIA=True and whether you lower MEDIA_MAX_SIZE=750mb.

+- Disk usage can be reduced by using a compressed/deduplicated filesystem like ZFS/BTRFS, or by turning off extractors methods you don't need. You can also deduplicate content with a tool like

fdupes or rdfind.

+

+- Don't store large collections on older filesystems like EXT3/FAT as they may not be able to handle more than 50k directory entries in the

data/archive/ folder.

+

+- Try to keep the

data/index.sqlite3 file on local drive (not a network mount) or SSD for maximum performance, however the data/archive/ folder can be on a network mount or slower HDD.

+- If using Docker or NFS/SMB/FUSE for the `data/archive/` folder, you may need to set

PUID & PGID and disable root_squash on your fileshare server.

+

+

Learn More

@@ -1163,19 +1160,23 @@ ArchiveBox aims to enable more of the internet to be saved from deterioration by

Vast treasure troves of knowledge are lost every day on the internet to link rot. As a society, we have an imperative to preserve some important parts of that treasure, just like we preserve our books, paintings, and music in physical libraries long after the originals go out of print or fade into obscurity.

-Whether it's to resist censorship by saving articles before they get taken down or edited, or just to save a collection of early 2010's flash games you love to play, having the tools to archive internet content enables to you save the stuff you care most about before it disappears.

+Whether it's to resist censorship by saving news articles before they get taken down or edited, or just to save a collection of early 2010's flash games you loved to play, having the tools to archive internet content enables to you save the stuff you care most about before it disappears.

+The balance between the permanence and ephemeral nature of content on the internet is part of what makes it beautiful. I don't think everything should be preserved in an automated fashion--making all content permanent and never removable, but I do think people should be able to decide for themselves and effectively archive specific content that they care about, just like libraries do. Without the work of archivists saving physical books, manuscrips, and paintings we wouldn't have any knowledge of our ancestors' history. I believe archiving the web is just as important to provide the same benefit to future generations.

-The balance between the permanence and ephemeral nature of content on the internet is part of what makes it beautiful. I don't think everything should be preserved in an automated fashion--making all content permanent and never removable, but I do think people should be able to decide for themselves and effectively archive specific content that they care about.

+ArchiveBox's stance is that duplication of other people's content is only ethical if it:

-Because modern websites are complicated and often rely on dynamic content,

-ArchiveBox archives the sites in **several different formats** beyond what public archiving services like Archive.org/Archive.is save. Using multiple methods and the market-dominant browser to execute JS ensures we can save even the most complex, finicky websites in at least a few high-quality, long-term data formats.

+- A. doesn't deprive the original creators of revenue and

+- B. is responsibly curated by an individual/institution.

+In the U.S., libraries, researchers, and archivists are allowed to duplicate copyrighted materials under "fair use" for private study, scholarship, or research. Archive.org's preservation work is covered under this exemption, as they are as a non-profit providing public service, and they respond to unethical content/DMCA/GDPR removal requests.

+

+As long as you A. don't try to profit off pirating copyrighted content and B. have processes in place to respond to removal requests, many countries allow you to use sofware like ArchiveBox to ethically and responsibly archive any web content you can view. That being said, ArchiveBox is not liable for how you choose to operate the software. You must research your own local laws and regulations, and get proper legal council if you plan to host a public instance (start by putting your DMCA/GDPR contact info in FOOTER_INFO and changing your instance's branding using CUSTOM_TEMPLATES_DIR).

@@ -1188,7 +1189,7 @@ ArchiveBox archives the sites in **several different formats** beyond what publi

> **Check out our [community wiki](https://github.com/ArchiveBox/ArchiveBox/wiki/Web-Archiving-Community) for a list of web archiving tools and orgs.**

-A variety of open and closed-source archiving projects exist, but few provide a nice UI and CLI to manage a large, high-fidelity archive collection over time.

+A variety of open and closed-source archiving projects exist, but few provide a nice UI and CLI to manage a large, high-fidelity collection over time.

@@ -1576,10 +1577,10 @@ Extractors take the URL of a page to archive, write their output to the filesyst

-- [ArchiveBox.io Homepage](https://archivebox.io) / [Source Code (Github)](https://github.com/ArchiveBox/ArchiveBox) / [Demo Server](https://demo.archivebox.io)

-- [Documentation Wiki](https://github.com/ArchiveBox/ArchiveBox/wiki) / [API Reference Docs](https://docs.archivebox.io) / [Changelog](https://github.com/ArchiveBox/ArchiveBox/releases)

-- [Bug Tracker](https://github.com/ArchiveBox/ArchiveBox/issues) / [Discussions](https://github.com/ArchiveBox/ArchiveBox/discussions) / [Community Chat Forum (Zulip)](https://zulip.archivebox.io)

-- Find us on social media: [Twitter](https://twitter.com/ArchiveBoxApp), [LinkedIn](https://www.linkedin.com/company/archivebox/), [YouTube](https://www.youtube.com/@ArchiveBoxApp), [SaaSHub](https://www.saashub.com/archivebox), [Alternative.to](https://alternativeto.net/software/archivebox/about/), [Reddit](https://www.reddit.com/r/ArchiveBox/)

+- [ArchiveBox.io Website](https://archivebox.io) / [ArchiveBox Github (Source Code)](https://github.com/ArchiveBox/ArchiveBox) / [ArchiveBox Demo Server](https://demo.archivebox.io)

+- [Documentation (Github Wiki)](https://github.com/ArchiveBox/ArchiveBox/wiki) / [API Reference Docs (ReadTheDocs)](https://docs.archivebox.io) / [Roadmap](https://github.com/ArchiveBox/ArchiveBox/wiki/Roadmap) / [Changelog](https://github.com/ArchiveBox/ArchiveBox/releases)

+- [Bug Tracker (Github Issues)](https://github.com/ArchiveBox/ArchiveBox/issues) / [Discussions (Github Discussions)](https://github.com/ArchiveBox/ArchiveBox/discussions) / [Community Chat Forum (Zulip)](https://zulip.archivebox.io)

+- Find us on social media: [Twitter `@ArchiveBoxApp`](https://twitter.com/ArchiveBoxApp), [LinkedIn](https://www.linkedin.com/company/archivebox/), [YouTube](https://www.youtube.com/@ArchiveBoxApp), [SaaSHub](https://www.saashub.com/archivebox), [Alternative.to](https://alternativeto.net/software/archivebox/about/), [Reddit](https://www.reddit.com/r/ArchiveBox/)

---

@@ -1598,7 +1599,7 @@ Extractors take the URL of a page to archive, write their output to the filesyst

-- [ArchiveBox.io Homepage](https://archivebox.io) / [Source Code (Github)](https://github.com/ArchiveBox/ArchiveBox) / [Demo Server](https://demo.archivebox.io)

-- [Documentation Wiki](https://github.com/ArchiveBox/ArchiveBox/wiki) / [API Reference Docs](https://docs.archivebox.io) / [Changelog](https://github.com/ArchiveBox/ArchiveBox/releases)

-- [Bug Tracker](https://github.com/ArchiveBox/ArchiveBox/issues) / [Discussions](https://github.com/ArchiveBox/ArchiveBox/discussions) / [Community Chat Forum (Zulip)](https://zulip.archivebox.io)

-- Find us on social media: [Twitter](https://twitter.com/ArchiveBoxApp), [LinkedIn](https://www.linkedin.com/company/archivebox/), [YouTube](https://www.youtube.com/@ArchiveBoxApp), [SaaSHub](https://www.saashub.com/archivebox), [Alternative.to](https://alternativeto.net/software/archivebox/about/), [Reddit](https://www.reddit.com/r/ArchiveBox/)

+- [ArchiveBox.io Website](https://archivebox.io) / [ArchiveBox Github (Source Code)](https://github.com/ArchiveBox/ArchiveBox) / [ArchiveBox Demo Server](https://demo.archivebox.io)

+- [Documentation (Github Wiki)](https://github.com/ArchiveBox/ArchiveBox/wiki) / [API Reference Docs (ReadTheDocs)](https://docs.archivebox.io) / [Roadmap](https://github.com/ArchiveBox/ArchiveBox/wiki/Roadmap) / [Changelog](https://github.com/ArchiveBox/ArchiveBox/releases)

+- [Bug Tracker (Github Issues)](https://github.com/ArchiveBox/ArchiveBox/issues) / [Discussions (Github Discussions)](https://github.com/ArchiveBox/ArchiveBox/discussions) / [Community Chat Forum (Zulip)](https://zulip.archivebox.io)

+- Find us on social media: [Twitter `@ArchiveBoxApp`](https://twitter.com/ArchiveBoxApp), [LinkedIn](https://www.linkedin.com/company/archivebox/), [YouTube](https://www.youtube.com/@ArchiveBoxApp), [SaaSHub](https://www.saashub.com/archivebox), [Alternative.to](https://alternativeto.net/software/archivebox/about/), [Reddit](https://www.reddit.com/r/ArchiveBox/)

---

@@ -1598,7 +1599,7 @@ Extractors take the URL of a page to archive, write their output to the filesyst

-ArchiveBox was started by Nick Sweeting in 2017, and has grown steadily with help from our amazing contributors.

+ArchiveBox was started by Nick Sweeting in 2017, and has grown steadily with help from our amazing contributors.

✨ Have spare CPU/disk/bandwidth after all your 网站存档爬 and want to help the world?

Check out our Good Karma Kit...

diff --git a/archivebox/extractors/singlefile.py b/archivebox/extractors/singlefile.py

index 553c9f8d..1d5275dd 100644

--- a/archivebox/extractors/singlefile.py

+++ b/archivebox/extractors/singlefile.py

@@ -21,6 +21,7 @@ from ..config import (

SINGLEFILE_ARGS,

SINGLEFILE_EXTRA_ARGS,

CHROME_BINARY,

+ COOKIES_FILE,

)

from ..logging_util import TimedProgress

@@ -50,10 +51,11 @@ def save_singlefile(link: Link, out_dir: Optional[Path]=None, timeout: int=TIMEO

browser_args = '--browser-args={}'.format(json.dumps(browser_args[1:]))

# later options take precedence

options = [

+ '--browser-executable-path={}'.format(CHROME_BINARY),

+ *(["--browser-cookies-file={}".format(COOKIES_FILE)] if COOKIES_FILE else []),

+ browser_args,

*SINGLEFILE_ARGS,

*SINGLEFILE_EXTRA_ARGS,

- browser_args,

- '--browser-executable-path={}'.format(CHROME_BINARY),

]

cmd = [

DEPENDENCIES['SINGLEFILE_BINARY']['path'],

CLI Usage Examples (non-Docker)

CLI Usage Examples (non-Docker) CLI Usage Examples: non-Docker

CLI Usage Examples: non-Docker Docker Compose CLI Usage Examples

Docker Compose CLI Usage Examples CLI Usage Examples: Docker Compose

CLI Usage Examples: Docker Compose Docker CLI Usage Examples

Docker CLI Usage Examples CLI Usage Examples: Docker

CLI Usage Examples: Docker From manually exported [browser history](https://github.com/ArchiveBox/ArchiveBox/wiki/Quickstart#2-get-your-list-of-urls-to-archive) or [browser bookmarks](https://github.com/ArchiveBox/ArchiveBox/wiki/Quickstart#2-get-your-list-of-urls-to-archive) (in Netscape format)

- See instructions for: Chrome, Firefox, Safari, IE, Opera, and more...

+ Instructions: Chrome, Firefox, Safari, IE, Opera, and more...

-

From manually exported [browser history](https://github.com/ArchiveBox/ArchiveBox/wiki/Quickstart#2-get-your-list-of-urls-to-archive) or [browser bookmarks](https://github.com/ArchiveBox/ArchiveBox/wiki/Quickstart#2-get-your-list-of-urls-to-archive) (in Netscape format)

- See instructions for: Chrome, Firefox, Safari, IE, Opera, and more...

+ Instructions: Chrome, Firefox, Safari, IE, Opera, and more...

-  From URLs visited through a [MITM Proxy](https://mitmproxy.org/) with [`archivebox-proxy`](https://github.com/ArchiveBox/archivebox-proxy)

Provides [realtime archiving](https://github.com/ArchiveBox/ArchiveBox/issues/577) of all traffic from any device going through the proxy.

-

From URLs visited through a [MITM Proxy](https://mitmproxy.org/) with [`archivebox-proxy`](https://github.com/ArchiveBox/archivebox-proxy)

Provides [realtime archiving](https://github.com/ArchiveBox/ArchiveBox/issues/577) of all traffic from any device going through the proxy.

-  From bookmarking services or social media (e.g. Twitter bookmarks, Reddit saved posts, etc.)

- See instructions for: Pocket, Pinboard, Instapaper, Shaarli, Delicious, Reddit Saved, Wallabag, Unmark.it, OneTab, Firefox Sync, and more...

+ Instructions: Pocket, Pinboard, Instapaper, Shaarli, Delicious, Reddit Saved, Wallabag, Unmark.it, OneTab, Firefox Sync, and more...

From bookmarking services or social media (e.g. Twitter bookmarks, Reddit saved posts, etc.)

- See instructions for: Pocket, Pinboard, Instapaper, Shaarli, Delicious, Reddit Saved, Wallabag, Unmark.it, OneTab, Firefox Sync, and more...

+ Instructions: Pocket, Pinboard, Instapaper, Shaarli, Delicious, Reddit Saved, Wallabag, Unmark.it, OneTab, Firefox Sync, and more...

@@ -1018,7 +1017,7 @@ For various reasons, many large sites (Reddit, Twitter, Cloudflare, etc.) active

@@ -1018,7 +1017,7 @@ For various reasons, many large sites (Reddit, Twitter, Cloudflare, etc.) active

-- [ArchiveBox.io Homepage](https://archivebox.io) / [Source Code (Github)](https://github.com/ArchiveBox/ArchiveBox) / [Demo Server](https://demo.archivebox.io)

-- [Documentation Wiki](https://github.com/ArchiveBox/ArchiveBox/wiki) / [API Reference Docs](https://docs.archivebox.io) / [Changelog](https://github.com/ArchiveBox/ArchiveBox/releases)

-- [Bug Tracker](https://github.com/ArchiveBox/ArchiveBox/issues) / [Discussions](https://github.com/ArchiveBox/ArchiveBox/discussions) / [Community Chat Forum (Zulip)](https://zulip.archivebox.io)

-- Find us on social media: [Twitter](https://twitter.com/ArchiveBoxApp), [LinkedIn](https://www.linkedin.com/company/archivebox/), [YouTube](https://www.youtube.com/@ArchiveBoxApp), [SaaSHub](https://www.saashub.com/archivebox), [Alternative.to](https://alternativeto.net/software/archivebox/about/), [Reddit](https://www.reddit.com/r/ArchiveBox/)

+- [ArchiveBox.io Website](https://archivebox.io) / [ArchiveBox Github (Source Code)](https://github.com/ArchiveBox/ArchiveBox) / [ArchiveBox Demo Server](https://demo.archivebox.io)

+- [Documentation (Github Wiki)](https://github.com/ArchiveBox/ArchiveBox/wiki) / [API Reference Docs (ReadTheDocs)](https://docs.archivebox.io) / [Roadmap](https://github.com/ArchiveBox/ArchiveBox/wiki/Roadmap) / [Changelog](https://github.com/ArchiveBox/ArchiveBox/releases)

+- [Bug Tracker (Github Issues)](https://github.com/ArchiveBox/ArchiveBox/issues) / [Discussions (Github Discussions)](https://github.com/ArchiveBox/ArchiveBox/discussions) / [Community Chat Forum (Zulip)](https://zulip.archivebox.io)

+- Find us on social media: [Twitter `@ArchiveBoxApp`](https://twitter.com/ArchiveBoxApp), [LinkedIn](https://www.linkedin.com/company/archivebox/), [YouTube](https://www.youtube.com/@ArchiveBoxApp), [SaaSHub](https://www.saashub.com/archivebox), [Alternative.to](https://alternativeto.net/software/archivebox/about/), [Reddit](https://www.reddit.com/r/ArchiveBox/)

---

@@ -1598,7 +1599,7 @@ Extractors take the URL of a page to archive, write their output to the filesyst

-- [ArchiveBox.io Homepage](https://archivebox.io) / [Source Code (Github)](https://github.com/ArchiveBox/ArchiveBox) / [Demo Server](https://demo.archivebox.io)

-- [Documentation Wiki](https://github.com/ArchiveBox/ArchiveBox/wiki) / [API Reference Docs](https://docs.archivebox.io) / [Changelog](https://github.com/ArchiveBox/ArchiveBox/releases)

-- [Bug Tracker](https://github.com/ArchiveBox/ArchiveBox/issues) / [Discussions](https://github.com/ArchiveBox/ArchiveBox/discussions) / [Community Chat Forum (Zulip)](https://zulip.archivebox.io)

-- Find us on social media: [Twitter](https://twitter.com/ArchiveBoxApp), [LinkedIn](https://www.linkedin.com/company/archivebox/), [YouTube](https://www.youtube.com/@ArchiveBoxApp), [SaaSHub](https://www.saashub.com/archivebox), [Alternative.to](https://alternativeto.net/software/archivebox/about/), [Reddit](https://www.reddit.com/r/ArchiveBox/)

+- [ArchiveBox.io Website](https://archivebox.io) / [ArchiveBox Github (Source Code)](https://github.com/ArchiveBox/ArchiveBox) / [ArchiveBox Demo Server](https://demo.archivebox.io)

+- [Documentation (Github Wiki)](https://github.com/ArchiveBox/ArchiveBox/wiki) / [API Reference Docs (ReadTheDocs)](https://docs.archivebox.io) / [Roadmap](https://github.com/ArchiveBox/ArchiveBox/wiki/Roadmap) / [Changelog](https://github.com/ArchiveBox/ArchiveBox/releases)

+- [Bug Tracker (Github Issues)](https://github.com/ArchiveBox/ArchiveBox/issues) / [Discussions (Github Discussions)](https://github.com/ArchiveBox/ArchiveBox/discussions) / [Community Chat Forum (Zulip)](https://zulip.archivebox.io)

+- Find us on social media: [Twitter `@ArchiveBoxApp`](https://twitter.com/ArchiveBoxApp), [LinkedIn](https://www.linkedin.com/company/archivebox/), [YouTube](https://www.youtube.com/@ArchiveBoxApp), [SaaSHub](https://www.saashub.com/archivebox), [Alternative.to](https://alternativeto.net/software/archivebox/about/), [Reddit](https://www.reddit.com/r/ArchiveBox/)

---

@@ -1598,7 +1599,7 @@ Extractors take the URL of a page to archive, write their output to the filesyst